By Sina Bari MD, AVP Healthcare and Life Sciences AI at iMerit Technology

Today, I want to discuss one of the most promising developments in medical imaging: foundation models in radiology.

The Data Quality Imperative in Radiological AI

I once heard an attending say, “the solution to pollution is dilution” while washing out an infected wound. I’ve wondered whether the same applies to our challenges in AI. Can we overcome the ambiguity and subjectivity of data with volume? In radiology, this question becomes even more critical. I’ve led teams that meticulously annotate millions of imaging studies to ensure that our models capture the subtle variations that distinguish normal from pathological findings. What we’ve learned is that the more data you have, the more nuance and subjectivity you can capture, and the more robust the dataset is to individual errors. Small datasets, by contrast, can be extremely sensitive to quality. High volume, of course, can mean high cost.
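To make the "dilution" intuition concrete, here is a minimal simulation sketch. It assumes a single true measurement (a hypothetical 10 mm lesion) and annotator readings that scatter around it with random, unbiased noise; the function name, noise level, and sample sizes are all illustrative assumptions, not figures from any real project. Under those assumptions, the error of the averaged reading shrinks as the number of readings grows. Note that a systematic bias shared by all annotators would not be diluted this way.

```python
import random
import statistics

def mean_abs_error(n_readings, noise_sd=2.0, true_value=10.0, trials=200, seed=0):
    """Average absolute error of the mean of n noisy readings, over many trials.

    All parameters are hypothetical: true_value is a lesion diameter in mm,
    noise_sd is the spread of individual annotator readings.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        # Each reading is the true value plus random, unbiased annotator noise.
        readings = [rng.gauss(true_value, noise_sd) for _ in range(n_readings)]
        errors.append(abs(statistics.fmean(readings) - true_value))
    return statistics.fmean(errors)

# Compare small, medium, and large annotation volumes.
errors_by_size = {n: mean_abs_error(n) for n in (10, 100, 1000)}
for n, err in errors_by_size.items():
    print(f"n={n:>4}  mean abs error={err:.3f} mm")
```

The small sample is the one most exposed to a few bad readings, which is the sense in which small datasets are sensitive to quality.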

The Data Quality Pipeline in Radiological AI

Raw Radiological Images → Expert Radiologist Annotation → Quality Assurance Review → Dataset Balancing → Foundation Model Training
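The stages above can be sketched as a chain of small functions. This is only an illustration under stated assumptions: the `Study` record, the second-reader agreement check used for QA, and the downsampling used for balancing are all hypothetical choices, not a description of any production pipeline.

```python
import random
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass
class Study:
    study_id: str
    label: Optional[str] = None   # filled in by expert annotation
    qa_passed: bool = False       # set by QA review

def annotate(studies, labels_by_id):
    """Attach expert labels; studies without a label stay as None."""
    for s in studies:
        s.label = labels_by_id.get(s.study_id)
    return studies

def qa_review(studies, second_read_by_id):
    """Keep only studies where a second reader agrees with the first label."""
    kept = []
    for s in studies:
        if s.label is not None and second_read_by_id.get(s.study_id) == s.label:
            s.qa_passed = True
            kept.append(s)
    return kept

def balance(studies, seed=0):
    """Downsample every class to the size of the smallest class."""
    by_label = defaultdict(list)
    for s in studies:
        by_label[s.label].append(s)
    if not by_label:
        return []
    n = min(len(group) for group in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for group in by_label.values():
        balanced.extend(rng.sample(group, n))
    return balanced

# Toy data: 7 normal and 3 abnormal studies, with one reader disagreement.
studies = [Study(f"s{i}") for i in range(10)]
first_read = {f"s{i}": ("normal" if i < 7 else "abnormal") for i in range(10)}
second_read = dict(first_read)
second_read["s0"] = "abnormal"  # the second reader disagrees on s0

training_set = balance(qa_review(annotate(studies, first_read), second_read))
print(len(training_set), "studies ready for model training")
```

In this toy run, the disputed study is dropped at QA and the majority class is then downsampled, so the model-training stage would receive equal numbers of each label.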

What Makes Foundation Models Different?

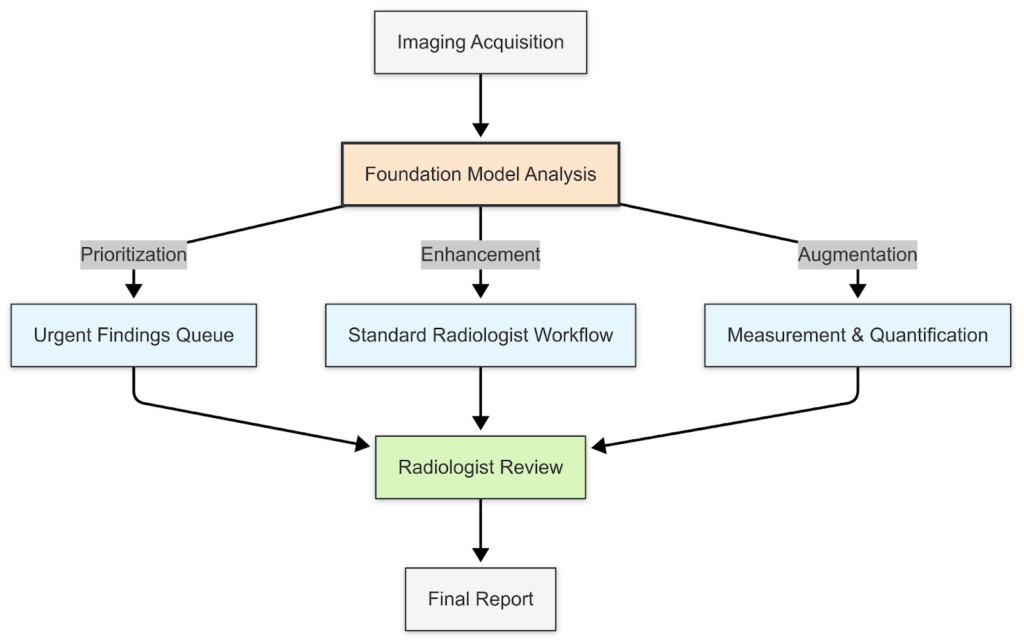

Unlike traditional machine learning approaches that require task-specific training, foundation models in radiology—much like their NLP counterparts—learn generalizable representations from vast imaging datasets before being fine-tuned for specific diagnostic tasks.

Foundation models offer three distinct advantages:

- Transfer Learning Efficiency: Models pre-trained on chest X-rays can be rapidly adapted to identify subtle fractures or pneumonia with minimal additional training.

- Multimodal Integration: Foundation models can simultaneously process radiographs, patient history, and laboratory values—mirroring how we as physicians think.

- Reduced Annotation Burden: These models can leverage self-supervised learning to extract patterns from large volumes of unlabeled images.

Can we have the best of both worlds?

How do we evaluate foundation models from a cost/benefit perspective? Foundation models can do what their name suggests: serve as a foundation for building fine-tuned models. At iMerit, my teams are building fine-tuned models on data pre-annotated by foundation models to improve accuracy at lower cost.
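One way to picture pre-annotation in practice is a routing step that decides how much human attention each model-generated label receives. The sketch below is an assumption for illustration only: the `PreAnnotation` record, its `confidence` field, and the 0.9 threshold are hypothetical and are not taken from any specific workflow.

```python
from dataclasses import dataclass

@dataclass
class PreAnnotation:
    study_id: str
    label: str
    confidence: float  # hypothetical model score in [0, 1]

def route(pre_annotations, threshold=0.9):
    """Split pre-annotations into a light-review queue and a full-review queue."""
    light_review, full_review = [], []
    for p in pre_annotations:
        if p.confidence >= threshold:
            light_review.append(p)   # expert confirms or lightly corrects
        else:
            full_review.append(p)    # expert annotates from scratch
    return light_review, full_review

# Toy batch of model-generated labels.
batch = [
    PreAnnotation("s1", "pneumonia", 0.97),
    PreAnnotation("s2", "normal", 0.62),
    PreAnnotation("s3", "fracture", 0.91),
]
light, full = route(batch)
print(f"{len(light)} for light review, {len(full)} for full review")
```

The cost saving in such a design would come from experts spending less time on the cases the model handles well, while every label still passes through a human before it is used for fine-tuning.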

Looking ahead, I anticipate that these models will fundamentally transform workflow efficiency. At iMerit, where we’ve integrated with MLOps infrastructures processing terabytes of medical data daily, we’re already seeing foundation models reduce radiologist segmentation times by 22% to 40% while improving precision.

I believe foundation models represent the most promising path toward augmented radiological intelligence. By addressing the data quality challenge, embracing collaborative implementation, and prioritizing ethical considerations, we can harness these powerful tools to improve efficiency while enhancing—never replacing—the irreplaceable human intelligence at the heart of medicine.

Sina Bari MD is Senior Director of Medical AI at iMerit Technology, where he leads a team developing advanced AI solutions for healthcare applications. With backgrounds in plastic surgery and medical technology, Dr. Bari bridges clinical expertise with AI innovation.